AIxCC Series - Introduction

Part I

It’s been a while since something got me as excited as DARPA’s AI Cyber Challenge (AIxCC) did this year at DEF CON. Probably since DARPA’s last big hacking spectacle: the Cyber Grand Challenge (CGC) of 2015–2016.

Back then, symbolic execution was the drug of choice. Seven teams, dozens of people, two years of obsessive work—pioneering fully automated systems to find, exploit, and fix vulnerabilities in binaries with no source code.

I wasn’t a competitor, but the addiction got to me too. For nearly two years I built fuzzers that used symbolic execution to push code coverage deeper, into the dark corners where bugs breed. I even wrote an IDA Pro plugin, called Ponce, to do symbolic execution right inside IDA’s UI—submitted it to the IDA Pro Plugin contest, and won first prize 🎉. At the time I was leading a small security team at Salesforce, hunting for vulnerabilities in third-party applications. Some of those finds, once properly weaponized in the shape of an exploit, became a different avenue in our Red Team engagements.

A Short Detour: What Symbolic Execution Really Is

I used to explain symbolic execution like this: imagine translating a program into a giant system of equations—yes, the kind from high school math. The variables (x, y, etc.) are bytes you can control. Each assembly instruction becomes a symbolic expression, which lets you reason about whether a branch (jz, jnz…) depends on input you can manipulate. If it does, you can explore both branches—critical for thorough fuzzing.
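To make the equations analogy concrete, here is a toy sketch in Python. It is not how a real engine works: production tools lift machine instructions into expressions and hand them to a constraint solver, while this sketch models a single controllable byte and swaps the solver for a brute-force search over its 256 possible values. The names `branch_condition` and `solve`, and the arithmetic inside the condition, are made up for illustration.

```python
from typing import Callable, Optional


def branch_condition(x: int) -> bool:
    """Hypothetical branch guard: the jump is taken when (x * 3 + 7) & 0xFF == 0x40.

    `x` stands for one input byte the attacker controls (the "variable"
    in the system of equations).
    """
    return (x * 3 + 7) & 0xFF == 0x40


def solve(cond: Callable[[int], bool], want: bool) -> Optional[int]:
    """Stand-in for a constraint solver: try every byte value.

    Returns the first byte that makes `cond` evaluate to `want`,
    or None if that side of the branch cannot be reached.
    """
    for x in range(256):
        if cond(x) == want:
            return x
    return None


# One concrete input for each side of the branch.
taken = solve(branch_condition, True)
not_taken = solve(branch_condition, False)
print(f"input that takes the branch:  {taken}")
print(f"input that skips the branch: {not_taken}")
```

If `solve` finds a value for both sides, the branch depends on input we control, and a fuzzer can be handed one input per side to explore both paths.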

It’s powerful, but not without problems:

Very slow — Converting every assembly instruction, even those inside libraries, is very costly. One way around this is to apply symbolic execution selectively, only where traditional guided fuzzing stalls. Another is to create a single symbolic expression for each known libc API (printf, puts, fopen, …) instead of one for every instruction within it.

Symbolic indexing — Arrays with symbolic indexes can produce unrealistic paths.

Path explosion — Branches and loops multiply execution paths exponentially, quickly overwhelming resources.
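The path explosion problem is easy to see with a small counting sketch. It assumes the simplest worst case, where every branch is independent of the others, so each one doubles the number of paths. Real programs have correlated branches and loops, so the actual numbers differ, but the growth has the same shape. `count_paths` is a hypothetical helper, not part of any tool.

```python
from itertools import product


def count_paths(num_branches: int) -> int:
    """Count every taken/not-taken combination for independent branches."""
    return sum(1 for _ in product((False, True), repeat=num_branches))


for n in (1, 8, 16):
    print(f"{n:>2} branches -> {count_paths(n)} paths")
```

Sixteen independent branches already give 65,536 paths, each of which would need its own set of constraints solved.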

You can find more information on symbolic execution in one of my old posts.

That’s why, even with its promise, symbolic execution never dethroned guided fuzzing as the industry’s go-to technique for finding vulnerabilities. The best tech is the one researchers actually use, not the one that just looks sexy on paper. Some AIxCC teams didn’t even use symbolic execution in their systems.

Enter AIxCC: The 2025 Upgrade

AIxCC is, in a way, CGC’s spiritual successor—but with a different high. This time, DARPA’s drug of choice is artificial intelligence. It makes sense: in 2016, AI was barely making headlines; now, it’s the most powerful, widely available technology humans can access.

The rules also mark a major shift:

Source, not binaries — Teams work on open source projects, not mystery binaries.

No exploit required — Finding the bug is enough; no need to weaponize it.

Two challenge types:

Full-scan — Search the entire project for vulnerabilities.

Delta-scan — Focus only on changes from a specific commit.

Harness-reachable only — Bugs must be discoverable through existing test harnesses.
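As a rough illustration of what "harness-reachable" means, here is a sketch in Python. Everything in it is hypothetical: the target functions, the bugs, and the harness are stand-ins I made up, and the real challenge projects and their harness formats are not shown here. The point is only that a bug counts when some input fed to an existing harness can trigger it.

```python
def parse_record(data: bytes) -> int:
    """Hypothetical target: first byte is a length, the rest is the payload.

    Deliberate bug: the length is trusted without checking it against
    the payload size.
    """
    if len(data) < 2:
        return 0
    length = data[0]
    payload = data[1:]
    if length == 0:
        return 0
    return payload[length - 1]  # out-of-bounds when length > len(payload)


def legacy_decode(data: bytes) -> int:
    """Also buggy (assumes at least 11 bytes), but no harness calls it."""
    return data[10]


def harness(data: bytes) -> None:
    """Hypothetical harness: the only entry point a system may feed inputs to."""
    parse_record(data)


harness(b"\x01A")  # well-formed input, no crash
try:
    harness(b"\x05A")  # length byte larger than the payload
except IndexError as exc:
    print(f"crash reached through the harness: {exc!r}")
```

Under this reading, the bug in `parse_record` is in scope because the harness reaches it, while the one in `legacy_decode` is not, however real it may be.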

And one more thing: teams must open-source their systems. That means the rest of us can dig in, learn, and experiment.

Where This Series Goes Next

If CGC proved that fully automated bug finding was possible, AIxCC could redefine how it’s done. This isn’t about replacing humans; it’s about amplifying what’s possible—finding and fixing more bugs, faster, and at scale.

At DEF CON, during a roundtable, Matt Knight and Dave Aitel reflected on their time supporting AIxCC and shared what they hope comes next. Matt Knight summed it up: “The work starts now.” Dave Aitel added a challenge of his own: “analyze the results, figure out why 33% of vulnerabilities were missed, see what the top teams did differently, and learn from it.” That mindset will shape the rest of this series.

Over the next few entries, I’ll:

Better understand the challenge rules.

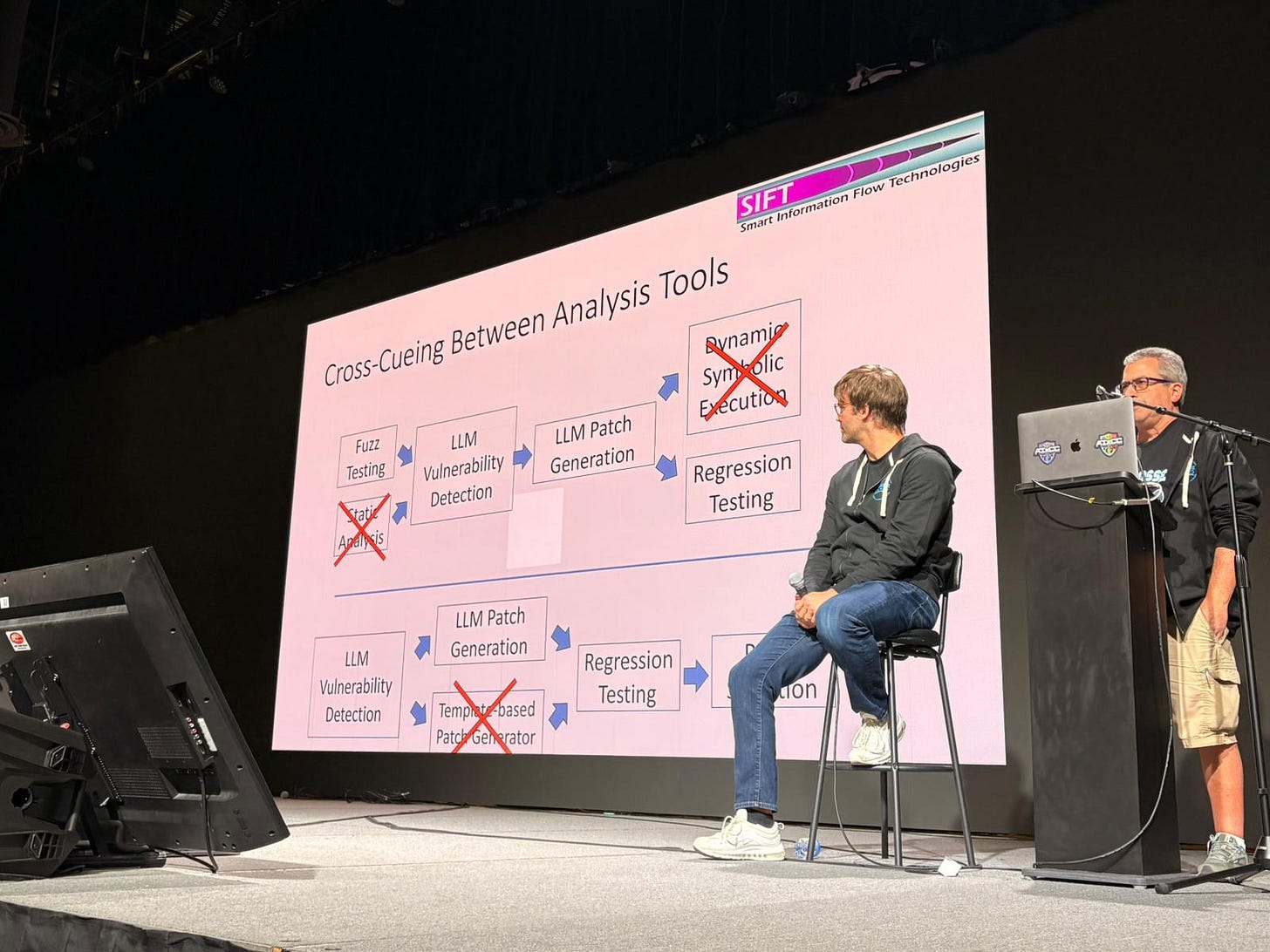

Break down the finalists’ different approaches.

Compare results across full-scan vs delta-scan.

Look at the role of sanitizers in triggering bugs.

Trace where the most (and least) vulnerabilities came from.

Link to and explore the released code.

I’ll be distilling hours of talks, documents, and source code into something you can follow over coffee rather than over weeks of digging. And I’ll be learning alongside you.